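Requesting too much data with RDD takeSample() results in an out-of-memory error, similar to collect(). The signature of takeSample() is:

```python
takeSample(self, withReplacement, num, seed=None)
```

In summary, PySpark sampling can be done on both RDDs and DataFrames. With sample() and sampleBy() you specify the fraction of the data you want to retrieve, so the number of returned records is approximate. With takeSample() you specify the number of records (num) directly. Use a seed to regenerate the same sample multiple times.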
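To illustrate how a fixed-size sample differs from a fraction-based one, here is a minimal pure-Python sketch of takeSample()-style sampling over a plain list. The helper name take_sample is hypothetical and this is not Spark's implementation. It only mirrors the documented behaviour: the result is a list of num elements, duplicates are possible only with replacement, and a fixed seed gives a repeatable result.

```python
import random


def take_sample(data, with_replacement, num, seed=None):
    """Hypothetical sketch of fixed-size sampling in the spirit of RDD.takeSample().

    Returns a plain list, just as takeSample() brings its result back to the driver.
    """
    rng = random.Random(seed)  # same seed -> same sequence of choices
    if with_replacement:
        # Each draw picks from the full data, so duplicates are possible.
        return [rng.choice(data) for _ in range(num)]
    # Without replacement the result cannot be larger than the input.
    return rng.sample(data, min(num, len(data)))


data = list(range(100))
print(take_sample(data, False, 10, seed=0))
print(take_sample(data, True, 30, seed=123))
```

Because the whole result is materialised as one in-memory list, a very large num has the same memory cost on the driver that the text warns about.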

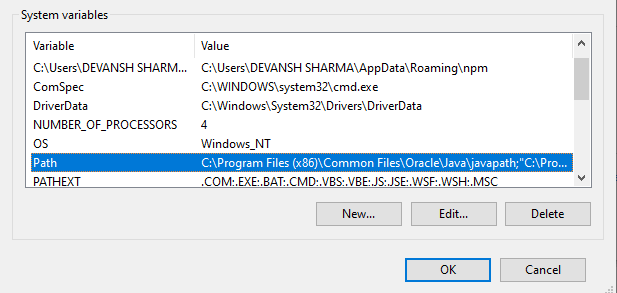


1.2 Using seed to reproduce the same samples in PySpark

Every time you run sample(), it returns a different set of records. During development and testing, however, you may need to regenerate the same sample on every run so that you can compare the results with a previous run. To get the same random sample on every run, use the same seed value; change the seed value to get a different sample. For example, two calls to sample() with seed 123 return the same records, while a call with seed 456 returns a different set. The sample is only reproducible as long as the input data and its partitioning stay the same.

1.3 Sample withReplacement (May contain duplicates)

Sometimes you may need a random sample that can contain repeated values. Passing True as the withReplacement argument allows the same record to be selected more than once:

```python
print(df.sample(True, 0.3, 123).collect())  # with duplicates
```

In the author's run of this example, the values 14, 52 and 65 were repeated.

You can get stratified sampling in PySpark, without replacement, by using the DataFrame sampleBy(col, fractions, seed=None) method. You give it a sampling fraction for each stratum (each distinct value of col), and it returns a new DataFrame containing the stratified sample. If a stratum is not specified, its fraction defaults to zero, so none of its rows are selected.

fractions – a dictionary whose keys are stratum values and whose values are the sampling fractions for those strata.

PySpark RDD also provides a sample() method to get a random sample, as well as takeSample(), which returns the sample to the driver as a Python list instead of as a new RDD.

RDD sample() returns a random sample just like DataFrame sample() and takes similar parameters, but on an RDD withReplacement is required and comes first. Since I've already explained these parameters in the DataFrame section above, I won't repeat the explanation here; if you haven't read it yet, I recommend reading it first. RDD sample() returns a new RDD containing the randomly selected elements. Its signature is:

```python
sample(self, withReplacement, fraction, seed=None)
```

Below is an example of the RDD sample() function:

```python
print(rdd.sample(True, 0.3, 123).collect())
```

RDD takeSample() is an action, so use it with care: it returns the selected sample records to the driver's memory.

To add a unique ID column to a DataFrame, use monotonically_increasing_id():

```python
from pyspark.sql.functions import monotonically_increasing_id
dfWithUniqueId = df.withColumn('uniqueid', monotonically_increasing_id())
```

The generated IDs are guaranteed to be monotonically increasing and unique, but not consecutive. In the current implementation, the partition ID is stored in the upper 31 bits and the record number within each partition in the lower 33 bits, so rows outside the first partition get IDs of 10 or more digits even when the DataFrame has only a few rows. As an example, consider a Spark DataFrame with two partitions, each with 3 records: the generated IDs are 0, 1, 2, 8589934592, 8589934593 and 8589934594.
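To make the seed behaviour from section 1.2 concrete without a Spark cluster, here is a minimal pure-Python sketch of sampling without replacement in which each record is kept independently with probability fraction (Bernoulli sampling, which matches how the RDD.sample() API documentation describes fraction when withReplacement is False). The function name bernoulli_sample is hypothetical; it is not Spark's code and will not reproduce Spark's actual output for the same seed.

```python
import random


def bernoulli_sample(data, fraction, seed=None):
    """Keep each element independently with probability `fraction` (hypothetical helper)."""
    rng = random.Random(seed)  # a fixed seed makes the draws repeatable
    return [x for x in data if rng.random() < fraction]


data = list(range(100))
first = bernoulli_sample(data, 0.1, seed=123)
second = bernoulli_sample(data, 0.1, seed=123)
third = bernoulli_sample(data, 0.1, seed=456)

print(first == second)  # True: same seed, same sample
print(len(first), len(third))  # sizes hover around 10 but are not guaranteed to be exactly 10
```

The varying sizes show why a fraction only gives an approximate number of records.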
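For withReplacement=True (section 1.3), the RDD.sample() API documentation describes fraction as the expected number of times each element is chosen. The sketch below models that by drawing a Poisson-distributed copy count for every element, which is why duplicates appear. The helpers poisson_sample and poisson_draw (a simple Knuth-style Poisson generator) are assumptions for illustration, not Spark's implementation.

```python
import math
import random


def poisson_draw(rng, lam):
    """Draw from a Poisson(lam) distribution using Knuth's multiplication method."""
    limit = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= limit:
            return k
        k += 1


def poisson_sample(data, fraction, seed=None):
    """Sample with replacement: each element appears Poisson(fraction) times (hypothetical helper)."""
    rng = random.Random(seed)
    result = []
    for x in data:
        # An element can be emitted 0, 1, 2, ... times, so repeats are possible.
        result.extend([x] * poisson_draw(rng, fraction))
    return result


sample = poisson_sample(list(range(100)), 0.3, seed=123)
repeated = sorted({x for x in sample if sample.count(x) > 1})
print(sample)
print("repeated values:", repeated)
```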
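The sampleBy() rule described above (each stratum gets its own fraction, and a stratum missing from fractions defaults to zero) can be sketched in plain Python over a list of dict rows. The helper sample_by and the example rows are hypothetical. Like sampleBy(), it samples without replacement, keeping each row with its stratum's probability.

```python
import random


def sample_by(rows, key, fractions, seed=None):
    """Stratified sampling without replacement (hypothetical helper).

    rows: list of dicts; key: the stratum column; fractions: {stratum: fraction}.
    Strata missing from `fractions` use a fraction of 0, so they are dropped.
    """
    rng = random.Random(seed)
    return [row for row in rows if rng.random() < fractions.get(row[key], 0.0)]


rows = [{"id": i, "group": i % 3} for i in range(30)]
picked = sample_by(rows, "group", {0: 1.0, 1: 0.5}, seed=42)
print(sorted({row["group"] for row in picked}))
```

Group 0 is kept in full, roughly half of group 1 is kept, and group 2, which has no entry in fractions, never appears.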
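The monotonically_increasing_id() layout mentioned above (partition ID in the upper 31 bits, record number within the partition in the lower 33 bits) can be checked with a little arithmetic. The function spark_style_id is a hypothetical stand-in that only reproduces that bit layout; it does not call Spark.

```python
def spark_style_id(partition_id, row_in_partition):
    """Combine a partition ID and a per-partition row number with the bit layout
    described for monotonically_increasing_id(): partition in the upper 31 bits,
    row number in the lower 33 bits."""
    return (partition_id << 33) + row_in_partition


# Two partitions with 3 records each.
ids = [spark_style_id(p, r) for p in range(2) for r in range(3)]
print(ids)  # [0, 1, 2, 8589934592, 8589934593, 8589934594]
```

This is why the first partition yields small IDs while every later partition starts at a multiple of 2^33.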
